Long before the scientific method was formally articulated by Sir Francis Bacon in the early 17th century, people were formulating theories to explain and predict natural phenomena. The Ptolemaic system of the ancient Greeks, designed to explain the movement of celestial objects, was both elegant and useful. Though it was later superseded by the more powerful Newtonian model, it was no less scientific.

Science works that way. A theory gives us some power over nature that we never had before, and then a new broader theory comes along that gives us even more power. It is that process that has allowed humans to gain an extraordinary level of control over nature.

But as long as people have been doing the science that gives us genuine control over nature, others have been taking short-cuts. These people practice creating the appearance of control for their own purposes. They are the Illusionists.

Their purposes vary. Some are entertainers, but the skills of illusion also convey power, not over nature but over people. This power is multiplied if the audience doesn’t realize they are experiencing an illusion.

Illusion and Artificial Intelligence have been intertwined from early days. In 1964 Joseph Weizenbaum at the MIT Artificial Intelligence Laboratory created ELIZA, an early chatbot based on a pattern matching and substitution methodology. ELIZA simulated the dialog patterns of the psychotherapist Carl Rogers, who was well known for simply echoing back whatever a person had just said. Weizenbaum had no intention of fooling anyone and was shocked that many early users were convinced that the program had intelligence and understanding.[i]

This included Weizenbaum’s own secretary who, it was reported, asked him to leave the room on one occasion so she and ELIZA could have a private conversation. She was subsequently horrified to learn he had access to all the transcripts. Fifty years later we may smile at such naivete, but in fact the illusionists are still at work today – and people are still falling for them.
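The pattern matching and substitution approach can be sketched in a few lines of Python. This is a minimal illustration only: the rules, the pronoun table, and the function names `reflect` and `respond` are invented for this example and are not taken from Weizenbaum's original script.

```python
import re

# Hypothetical rules for illustration; not Weizenbaum's original script.
# Each rule pairs a pattern with a reply template that echoes the match.
RULES = [
    (re.compile(r"\bi feel (.+)", re.IGNORECASE), "Why do you feel {0}?"),
    (re.compile(r"\bi am (.+)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"\bmy (.+)", re.IGNORECASE), "Tell me more about your {0}."),
]

# Pronoun swaps applied to the captured fragment before it is echoed back.
REFLECTIONS = {"i": "you", "me": "you", "my": "your", "am": "are"}

DEFAULT_REPLY = "Please go on."


def reflect(fragment: str) -> str:
    """Swap first-person words for second-person ones, word by word."""
    words = fragment.lower().split()
    return " ".join(REFLECTIONS.get(word, word) for word in words)


def respond(utterance: str) -> str:
    """Return the reply of the first matching rule, or a canned default."""
    text = utterance.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(text)
        if match:
            return template.format(reflect(match.group(1)))
    return DEFAULT_REPLY


print(respond("I feel sad about my job."))
print(respond("The weather is nice."))
```

Nothing in the sketch represents what sadness or a job is. The reply is the user's own words, rearranged by a template.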

Today’s crop of chatbots really hasn’t come that far from ELIZA. It doesn’t take long for people to realize that these programs don’t understand a word we say to them. The first few interchanges may amaze us, but after just a few minutes of “conversation” the spell is broken as we encounter some “canned,” non-sequitur response that was obviously composed by a real person in a back room somewhere.

But there is another class of natural language applications called “language models” that, given a “seed input” by a human, can generate text output that appears so much like what a human would write that the illusion of comprehension is stunning.

GPT-3 is a third-generation program based on the “Transformer” deep learning algorithm developed by Google. [ii]

I wrote about GPT-3 in my previous post and cited the example of the rather charming “story” about the unicorns that “had perfectly coiffed hair and wore what appeared to be Dior makeup.”

The generated text in the example flows smoothly from the human input and has excellent grammar, style, and imagery – it even appears to be creative. Who would think of unicorns wearing make-up? Who could read these words, knowing that they were entirely generated by a machine, and not be as amazed and dumbfounded as by the illusions of a stage magician of consummate skill?

But stage magicians generally admit to being illusionists. GPT-3 is the creation of an extravagantly well-financed company, OpenAI, founded for the express purpose of creating Artificial General Intelligence. So how do we know it is an illusion? Let’s check out what is going on behind the scenes and see if we can find evidence of smoke and mirrors.

We start by examining language comprehension in people. While we have no idea how our brains do this at the bytes and bits level (or whatever the brain uses instead), we don’t need to. We need only think through the functional steps that must occur to produce the end result. First and foremost, language is a communications protocol for taking an idea that originates in one mind and recreating it in the mind of another. It works like this:

John: I saw a cisticola when I was in Africa.

Helen: What is a cisticola?

John: It’s a pretty little bird.

In an instant Helen knows that cisticolas have beaks, wings, and feathers, and many other properties that were not supplied in the statement. So, while we tend to think of language as a kind of package that encloses the knowledge to be communicated, a statement in natural language is just like one in a computer language. It is an executable specification or instruction intended to be processed by a specific program.

Let’s look at the “articulation program” John uses to encode the idea into a language instruction:

First, he decomposes the concept denoted by the word “cisticola” in his mind into component concepts and selects certain ones that he guesses already exist in Helen’s mind. The key one is “bird,” because a person who classifies cisticolas as birds will assign them all the properties common to all birds, creating a rich new conception.

Second, he selects a concept that will help Helen distinguish cisticolas from other birds, namely that they are comparatively small. He now has a conceptual parts list for the new concept to be constructed in Helen’s mind from items already available in her “cognitive warehouse:” bird, little, and pretty.

Third, he provides the assembly instructions: he chooses some connective words that provide the “syntactical glue” (it, is, and a), then arranges everything in grammatical order.

Upon receiving the message, Helen processes the language instruction:

First, she resolves the words to existing ideas (semantics).

Second, she connects them to form the new idea in accordance with the grammar and syntax of the specification.

This process is precisely what the words comprehension and understanding mean in the context of natural language.

We can verify that the transfer of knowledge was successful with a little more communication:

John: Do cisticolas have feathers?

Helen: Yes, they do.

John: What is a cisticola?

Helen: They are small birds. You think they are pretty.
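The functional steps in this exchange can be made concrete with a small Python sketch. It is a toy illustration only and makes no claim about how a mind, or any real comprehension system, is built. The class names `Concept` and `Listener`, the contents of the warehouse, and the assumption that the statement arrives as modifier words followed by a category word are all invented for this example.

```python
class Concept:
    """One idea in the listener's "cognitive warehouse" (hypothetical model)."""

    def __init__(self, name, parent=None, properties=()):
        self.name = name
        self.parent = parent
        self.properties = set(properties)

    def all_properties(self):
        # A concept has its own properties plus everything its category has.
        inherited = self.parent.all_properties() if self.parent else set()
        return inherited | self.properties


class Listener:
    def __init__(self):
        # Ideas assumed to exist already before the conversation starts.
        bird = Concept("bird", properties={"beak", "wings", "feathers"})
        self.warehouse = {"bird": bird}
        # Known modifier words and the property each one denotes.
        self.modifiers = {"little": "small", "pretty": "pretty"}

    def comprehend(self, subject, description):
        """Build a new concept from e.g. ["pretty", "little", "bird"]."""
        *modifier_words, category_word = description
        # Semantics: resolve each word to an idea already in the warehouse.
        parent = self.warehouse[category_word]
        properties = {self.modifiers[word] for word in modifier_words}
        # Syntax: connect the parts into one new idea and store it.
        self.warehouse[subject] = Concept(subject, parent, properties)

    def has_property(self, subject, prop):
        return prop in self.warehouse[subject].all_properties()


helen = Listener()
helen.comprehend("cisticola", ["pretty", "little", "bird"])
print(helen.has_property("cisticola", "feathers"))  # never stated, inherited from "bird"
print(helen.warehouse["cisticola"].parent.name)
```

The point of the sketch is that the feathers were never in the message. They were already in the listener's warehouse, and the statement only specified where to attach the new concept.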

Returning to the text generators, we find they process language in an entirely different way. They do not start with a “cognitive warehouse” of ideas when processing an input. Rather, they process large datasets of text written by and for humans to build a language model that enables them, given words or phrases as an input, to calculate which other words and phrases are statistical matches for them as an output. Thus, they can generate texts that reflect statistical probabilities about word combinations extracted from language humans have composed.
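The idea of generating text from word statistics alone can be illustrated with a bigram model, which is a far simpler technique than a transformer and is used here only as a stand-in. The two-sentence corpus and the function names `train_bigrams` and `generate` are assumptions made for this sketch.

```python
import random
from collections import Counter, defaultdict


def train_bigrams(corpus):
    """Count, for every word, which words follow it in the corpus."""
    follows = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, following in zip(words, words[1:]):
            follows[current][following] += 1
    return follows


def generate(follows, seed, length=8, rng=None):
    """Extend the seed word by sampling each next word from observed counts."""
    rng = rng or random.Random()
    words = [seed.lower()]
    for _ in range(length - 1):
        candidates = follows.get(words[-1])
        if not candidates:
            break  # the last word was never followed by anything in training
        choices, weights = zip(*candidates.items())
        words.append(rng.choices(choices, weights=weights, k=1)[0])
    return " ".join(words)


# Tiny made-up corpus standing in for a large dataset of human-written text.
corpus = [
    "the unicorn had perfect hair",
    "the unicorn wore makeup",
]
model = train_bigrams(corpus)
print(generate(model, "the", length=4))
```

Every word the sketch emits was written by a person in the training text. The program only recombines those words according to how often they appeared next to each other.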

Although these programs are often described as having language comprehension and understanding, they do not. Transformer-based programs never leave the domain of language. They take in language created by humans and output more language that resembles language created by humans, without making any attempt to understand either the input or the output. They are processing statements of executable code with a program that has no relation to the program those instructions were intended for, and so they do not produce the same result, which is comprehension.

When they process language written by humans, they have no internal model of reality to relate the words to. They do not acquire a new concept from the input that they can refer to later, not even a new kind of bird from a simple sentence. The core technology is called machine learning, but what these programs learn in the training process is to recognize patterns of words, while humans learn new knowledge about reality from language. These two types of learning should not be confused (but commonly are). Despite appearances, text output by transformers is not even communication, because the programs did not start with an idea to be communicated in the first place, any more than ELIZA did.

Where, then, do the ideas that come into our minds when we read transformer-generated text originate? As with all language comprehension, most of the substance was already in our own minds. Since the semantics and syntax are valid and the original sources the algorithm used were encoded by humans, the probability that the generated text can be successfully decoded by the human comprehension process is high. The knowledge that comes to us when we read transformer-generated text is a kind of statistical amalgamation, the lowest common denominator of thoughts encoded by many people in language from the dataset. That is true for every intelligible thing that comes out of it, even when the program appears to be creative – if you wonder where the idea of unicorns with hair-dos came from, just google “my little unicorns.”

Until ELIZA, no human had ever encountered intelligible natural language that was not communication from another intelligent being, and so the belief that ELIZA was such a being was quite understandable in anyone who didn’t know otherwise. Once it was clear that every word that came from the program was composed by Dr. Weizenbaum, the spell was broken and the man behind the curtain was revealed.

Today, there is no man behind the curtain of the language transformers, at least no one person who can ever be identified, and the illusion of communication is so compelling that we are seeing the “ELIZA phenomenon” in people who – well, would be expected to know better.

We invited an AI to debate its own ethics in the Oxford Union – what it said was startling

Headlines like this are now quite common. This one is from The Conversation, a journal which “arose out of deep-seated concerns for the fading quality of our public discourse and recognition of the vital role that academic experts could play in the public arena …”

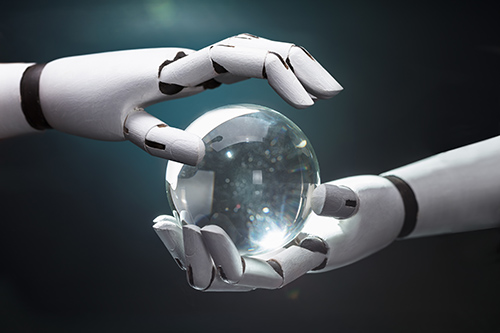

The title says the AI was “invited” to the debate as if it were some visiting scholar and straight up asserts that it has its own ethical principles. Perhaps there is a bit of tongue-in-cheek here, but the entire article goes on in the same vein. It is a commentary on interactions with the Megatron Transformer that, as described in the article, was trained on the entire text of Wikipedia, 63 million English news articles from 2016-2019 and 38 gigabytes of Reddit discourse.

Here are a couple of direct quotations from that commentary:

In other words, the Megatron is trained on more written material than any of us could reasonably expect to digest in a lifetime. After such extensive research, it forms its own views.

The Megatron was perfectly comfortable taking the alternative position to its own dystopian future-gazing, and was now painting a picture of an attractive late 21st century in which it played a key, but benign role.

Megatron is referred to as if it is in fact a thinking entity with comprehension, knowledge and its own opinions. Again, perhaps the authors are playing with their readers, using irony and metaphor, knowing it is an illusion but pretending it is real to drive home what seems to be the point of the article as summed up in the final paragraph:

What we in turn can imagine is that AI will not only be the subject of the debate for decades to come – but a versatile, articulate, morally agnostic participant in the debate itself.

But this is anti-climactic since the whole tone of the article treats Megatron as if it already is “a versatile, articulate, morally agnostic” entity. Its outputs are discussed as if they were prognostications that we ought to take seriously, presumably because Megatron was “trained on more written material than any of us could reasonably expect to digest in a lifetime.” Yes, it was “trained” on it, but didn’t comprehend a single word.

Whatever the authors think, many people who read such articles clearly are taken in by the illusion of comprehension and communication and are convinced that these programs are thinking intelligences which, if not already superior to humans, soon will be. It is ELIZA all over again, but this time it is not just a few people in a lab – these articles are published on the Internet and read by millions.

Asking a text-generator any question more difficult than a simple matter of fact makes little more sense than asking the question of a carnival “psychic.” The “little more” comes from the fact that the output from a text generator has some connection to statistically “lowest common denominator” responses to what people in the training dataset said in response to similar questions or on similar topics.

This is not to say that text generators don’t have legitimate uses. People use them as a kind of superior grammar and style tool, particularly useful for people trying to express themselves in a language other than their native one. You can input what you are trying to say (to other humans) and you may get an output that you feel says it better than you could have yourself.

The technology is also being used to create superior chatbots in narrow domains. The algorithm is trained on a dataset that contains answers to relevant questions and the program may retrieve them in response to people’s questions with an acceptable degree of accuracy.

But there are pitfalls in all these applications. It may not matter how a machine processes inputs if the output is useful and appropriate but, as we are seeing across the whole spectrum of machine learning applications, this can never be guaranteed. People will be far more likely to recognize the mistakes these systems are prone to (and save themselves from the kind of embarrassment that Weizenbaum and his poor secretary suffered) when they appreciate that no matter how compelling the illusion of comprehension, they are interacting with a machine that has no idea what it is talking about, and in fact has no ideas at all. Despite the name Artificial Intelligence, there is nothing there that we can recognize as intelligence in the way we recognize it in one another.

One might take from all this that the entire AI enterprise is a sham, a collection of useful tricks at best. But let’s not go there. First, machine learning is solving some amazingly difficult problems where finding statistical patterns in vast sets of data is key. Figuring out how protein molecules fold, pulling out the signature of a distant planet in data from an orbiting telescope, and identifying a tumor in a radiology image are all wonderful applications and no illusion. (Although why these techniques should be called AI in the first place is an excellent question.)

Second, even if machine learning can only give an illusion of comprehension, that does not mean comprehension is impossible for machines. Computers already excel at pattern recognition, reasoning and memory utilization, all capabilities humans rely on for comprehension. The trick is to give them something to think about, and that is knowledge of reality. The first wave of AI, the symbolic approach that ELIZA was based on, tried to represent knowledge as symbols, but that failed to scale. The second wave, today’s connectionist approach that transformer text generators are based on, makes no attempt at all to create knowledge, though some people hope they may someday be elevated to do so.

A third wave, which neither attempts to represent knowledge as a vast set of rules as Symbolic AI does nor attempts to reach general intelligence through emulation of the human brain like Connectionist AI, is already happening. [iii] In fact, in the above dialog that we used to illustrate how language articulation and comprehension work, Helen is a program based on this new technology.

[ii] “Why GPT-3 is the best and the worst of AI right now,” by Douglas Heaven in the MIT Technology Review.