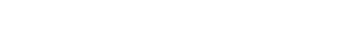

Sam Altman Is “Horribly Wrong” about AI (Podcast)

Sam Altman’s comments on AI potentially going horribly wrong are misguided. AI has already gone horribly wrong, and there’s no going back.

In this episode of the Synthetic Knowledge Podcast, New Sapience Founder and CEO, Bryant Cruse, discusses how Sam Altman’s version of AI (LLM chatbots such as ChatGPT) has triggered a cascade of societal disruptions. He also discusses how to get the industry, and the world, back on track with AI that doesn’t cause these social harms, an approach that New Sapience has pioneered.

Yann LeCun’s Open Source and AGI Risk (Podcast)

Meta’s AI Chief Yann LeCun was recently awarded the TIME100 Impact Award for his contributions to the world of artificial intelligence.

In this episode of the Synthetic Knowledge Podcast, New Sapience Founder and CEO, Bryant Cruse, discusses Yann LeCun’s comments about the prospect of machine learning achieving artificial general intelligence in any capacity. Bryant also touches on the risks of open-sourcing AI and whether AGI is an existential threat.

The Myth of GenAI Productivity (Podcast)

Is GenAI really going to cause unrestricted automation? Or is this more fluff than truth?

In this episode of the Synthetic Knowledge Podcast, New Sapience Founder and CEO, Bryant Cruse, discusses the use of GenAI in business automation, how it is a recipe for mediocrity, and why businesses may be making a mistake turning to these technologies.

The Taylor Swift AI Situation (Podcast)

What happened recently with Taylor Swift? What does this have to do with AI? And should OpenAI and Microsoft apologize to the celebrity?

In this episode of the Synthetic Knowledge Podcast, New Sapience Founder and CEO, Bryant Cruse, discusses what the Taylor Swift controversy was and is, how the inactions and reactions of Big Tech were subpar, and more broadly, why the release of ChatGPT in 2022 was essentially opening Pandora’s box.

Examining OpenAI’s Definition of AGI (Podcast)

What is Artificial General Intelligence, or AGI? Why did OpenAI change their set of core values and redefine what achieving AGI means?

In this episode of the Synthetic Knowledge Podcast, New Sapience Founder and CEO, Bryant Cruse, discusses what AGI is, why OpenAI’s definition of AGI doesn’t make sense, why language models will never achieve AGI, and most importantly, what will.

AI Accelerationists vs The Doomers (Podcast)

What are effective altruism and effective accelerationism? Why do they matter for the field of artificial intelligence? And why are these ideologies so detrimental to progress in the industry?

In this episode of the Synthetic Knowledge Podcast, New Sapience Founder and CEO, Bryant Cruse, and Director of Communications, Ayush Prakash, discuss whether LLMs understand language, and respond to Geoffrey Hinton’s and Yann LeCun’s claims.

On LLMs Understanding: A Response To Gary Marcus’ Article (Podcast)

In this episode of the Synthetic Knowledge Podcast, New Sapience Founder and CEO, Bryant Cruse, and Director of Communications, Ayush Prakash, discuss whether LLMs understand language, and respond to Geoffrey Hinton’s and Yann LeCun’s claims.

What’s Going On With OpenAI and Sam Altman? (Podcast)

In this episode of the Synthetic Knowledge Podcast, New Sapience Founder and CEO, Bryant Cruse, and Director of Communications, Ayush Prakash, discuss what has been going on over this past weekend involving Sam Altman and OpenAI, the company that launched ChatGPT in November 2022.

What Would A Science of AI Look Like? (Podcast)

In this episode of the Synthetic Knowledge Podcast, New Sapience Founder and CEO, Bryant Cruse, and Director of Communications, Ayush Prakash, discuss what a science of AI would look like, and Bryant even describes a theory of intelligence.

Why Can’t Big Tech Do What New Sapience Is Doing? (Podcast)

The second episode of the Synthetic Knowledge Podcast.

This episode’s question: Why can’t Big Tech do what New Sapience is doing?